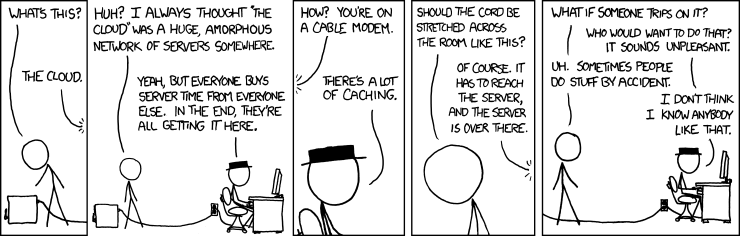

Image credit: XKCD

What happened?

Amazon Web Services experienced a service disruption lasting 8 hours and 33 minutes on Sunday, September 20, 2015, from 2:19 AM PDT to 10:52 AM PDT. The outage hit its US-EAST-1 (North Virginia) region. It began with a network disruption that sharply increased error rates on Amazon’s DynamoDB (NoSQL database) service. This triggered a cascade in which some 37 other AWS services began to falter, showing increased error rates for API requests.

Among these were the AWS SQS (Simple Queue Service), EC2 (Elastic Compute Cloud), CloudWatch (resource monitoring), and Console (browser-based management GUI) services. Nearly every AWS service in the North Virginia region was affected in some capacity during those early Sunday morning hours.

Only after Amazon’s engineers throttled requests to their APIs were they able to add capacity and begin restoring service to normal levels. Fortune and VentureBeat offer accessible reports on the outage. For the technically inclined, AWS’ post-mortem explains how the failure cascade occurred and how it was ultimately resolved. A timeline by Richard I. Cook is also fairly accessible.

The cascade seems to have extended beyond the initial outage, as some customers continued to report issues launching EC2 instances two days after the main disruption.

Who was affected?

What’s fascinating about this outage is how many of the affected web services people use every day. Among those that experienced disruptions in Amazon’s North Virginia region were Netflix, IMDB, Tinder, Airbnb, Nest, Medium, Pocket, Reddit, Product Hunt, Viber, Buffer, SocialFlow, GroupMe, and Amazon’s own Instant Video and Echo services.

The reaction

Some were surprised that so much of the Internet runs on Amazon’s cloud. Others warned that, with AWS supplying over 90% of the cloud compute capacity in use among the top 15 IaaS providers[1], the industry is becoming over-reliant on a single cloud vendor:

“Single cloud lock-in via APIs is real danger w/ API copyright. I fear recreating the 90s era Microsoft with a single vendor owning the interface for modern computation.” — Joe Beda (@JBeda), September 22, 2015

While AWS’ DynamoDB doesn’t have a stated SLA, one person on Twitter quipped:

“If DynamoDB can avoid further problems, they can be back on track for their five nines SLA in just 60 short years” — Dennis (@Opacki), September 20, 2015

Others found humour in the situation:

This is what I assume #AWS #AWSOutage looks like right now..

— Jess Bahr (@JessaBahr), September 20, 2015

Ian Rae, CEO of CloudOps, is not surprised that this issue occurred in Amazon’s US-EAST region:

“North Virginia is the ‘black hole’ of cloud compute. It was the first AWS region and everybody wants to be there. If someone is building a web app and they want to integrate with ten other SaaS providers hosted on Amazon, they’ll go to Virginia because that’s where they’ll get the lowest latency.

“Historically, US-EAST–1 has been the first location to launch new features, and they were always running out of capacity[2]. The fact is that Amazon can’t even know what the next issue is that they’re going to run into in North Virginia. This situation is a perfect example of how things can cascade in a complex system when something goes wrong.”

What does this mean for cloud computing?

Amazon outages are nothing new, and they happen from time to time[3]. When they do, some people freak out, others say that nothing has changed, and those who care most about robust architectures emphasize the importance of designing for failure with multiple regions and multiple clouds.

On the subject of designing for failure, there is a lot to learn from how Netflix handled this recent disruption. TechRepublic wrote an excellent report on how Netflix was able to ‘weather the storm’ by building systems designed to withstand even the most unpredictable failures:

Helping the service to weather the service disruption was its practice of what it calls “chaos engineering”.

The engineering approach sees Netflix deploy its Simian Army, software that deliberately attempts to wreak havoc on its systems. Simian Army attacks Netflix infrastructure on many fronts - Chaos Monkey randomly disables production instances, Latency Monkey induces delays in client-server communications, and the big boy, Chaos Gorilla, simulates the outage of an entire Amazon availability zone.

By constantly inducing failures in its systems, the firm is able to shore itself up against problems like those that affected AWS on Sunday.

In that instance, Netflix was able to rapidly redirect traffic from the impacted AWS region to datacenters in an unaffected area.
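The idea behind chaos engineering can be illustrated with a toy simulation. The sketch below is not Netflix’s Simian Army code. It is a hypothetical in-memory model that assumes a fleet of instances spread across made-up zones (`zone-a`, `zone-b`, `zone-c`) and treats the service as “up” while any instance is healthy. `chaos_monkey` disables one random instance and `chaos_gorilla` disables a whole zone, mirroring the roles described in the quote.

```python
import random
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    zone: str
    healthy: bool = True


def make_fleet(zones, per_zone):
    """Create per_zone instances in each of the given (hypothetical) zones."""
    return [Instance(f"{z}-{n}", z) for z in zones for n in range(per_zone)]


def serving(fleet):
    """Toy availability check: the service is up while any instance is healthy."""
    return any(i.healthy for i in fleet)


def chaos_monkey(fleet, rng):
    """Disable one randomly chosen healthy instance, like a toy Chaos Monkey."""
    candidates = [i for i in fleet if i.healthy]
    if not candidates:
        return None
    victim = rng.choice(candidates)
    victim.healthy = False
    return victim


def chaos_gorilla(fleet, zone):
    """Disable every instance in one zone, like a toy Chaos Gorilla."""
    for i in fleet:
        if i.zone == zone:
            i.healthy = False


if __name__ == "__main__":
    rng = random.Random(42)

    # Six instances spread over three zones: lose one instance, then a whole zone.
    spread = make_fleet(["zone-a", "zone-b", "zone-c"], per_zone=2)
    chaos_monkey(spread, rng)
    chaos_gorilla(spread, "zone-a")
    print("multi-zone fleet serving:", serving(spread))  # True

    # Six instances packed into one zone: losing that zone takes everything down.
    single = make_fleet(["zone-a"], per_zone=6)
    chaos_gorilla(single, "zone-a")
    print("single-zone fleet serving:", serving(single))  # False
```

The point of the exercise is the contrast. The fleet spread across three zones survives the loss of a zone plus one extra instance, while the same number of instances packed into a single zone does not. A real implementation would terminate actual instances and watch real health metrics rather than flip a flag.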

Brandon Butler at Network World writes that Netflix is leading the way by sharing its formulas with the industry:

[Some] argue that Netflix is turning into one of the most important cloud computing companies in the industry, not only by proving that a company making $3.7 billion annually can run some of its most critical workloads in the public cloud, but also by sharing with developers how it’s being done and providing others with a path to follow.

Some aren’t convinced that Netflix is such a great leader when it comes to industry best practices. Joe Masters Emison of InformationWeek argues that Netflix is setting a bad example with its over-reliance on AWS. Pointing to its “disregard for next-gen best practices” and its large influence in the open source community, Emison says that Netflix is leading us towards vendor lock-in and away from where our focus should be: using a multitude of IaaS providers in a multi-cloud world, which he calls “Cloud Computing v2.0”.

How to protect yourself

Ultimately, there are five main ways to configure your cloud infrastructure to avoid or minimize downtime in an outage like this:

- Design your application to fail over to multiple availability zones

- Design your application to fail over to multiple regions

- Use a cloud infrastructure designed with more redundancy at the hardware level, like cloud.ca[4]

- Build a hybrid cloud setup (private + public cloud) and use public cloud for failover and burst only

- Design your application to span multiple clouds — the multi-cloud option
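The zone, region and multi-cloud options above share one mechanism: a priority-ordered list of deployments and a health check that decides where traffic goes. Here is a minimal client-side sketch in Python. The endpoint URLs are hypothetical placeholders, and `http_health_check` assumes each deployment exposes an HTTP health URL that returns a 2xx status when healthy. In practice this decision is often made by DNS failover or a load balancer rather than in application code.

```python
import http.client
import urllib.request
from typing import Callable, Optional, Sequence


def http_health_check(url: str, timeout: float = 2.0) -> bool:
    """Treat a deployment as healthy if its health URL answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (OSError, http.client.HTTPException, ValueError):
        # URLError/HTTPError and timeouts are OSError subclasses;
        # ValueError covers malformed URLs.
        return False


def pick_endpoint(
    endpoints: Sequence[str], is_healthy: Callable[[str], bool]
) -> Optional[str]:
    """Return the first healthy endpoint in priority order, or None if all are down."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    return None


# Hypothetical deployments, most preferred first.
ENDPOINTS = [
    "https://us-east-1a.example.com/health",   # primary availability zone
    "https://us-east-1b.example.com/health",   # second zone, same region
    "https://us-west-2.example.com/health",    # another region
    "https://other-cloud.example.com/health",  # another cloud provider
]

if __name__ == "__main__":
    # Simulate a region-wide outage instead of making real network calls.
    down = {ENDPOINTS[0], ENDPOINTS[1]}
    print(pick_endpoint(ENDPOINTS, lambda ep: ep not in down))
    # prints https://us-west-2.example.com/health
```

Ordering the list from nearest to farthest keeps traffic on the low-latency primary during normal operation. Traffic moves to another region or provider only when every closer option has failed. An event that hits nearly every service in a region, as this one did, is exactly the case where the last two entries matter.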

A good example of the multi-cloud option is Peerio, an end-to-end encryption company, which uses cloud.ca and AWS as part of its multi-cloud strategy. Read the case study here.

There are other options too, most notably the default: doing nothing. If you don’t plan for availability zone or region failures, which will occur, you should at least know how much the resulting downtime will cost you.
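Estimating that cost is simple arithmetic. The sketch below converts an availability target into a yearly downtime budget and prices an outage at a flat hourly revenue figure. Both the $10,000-per-hour number and the assumption that losses scale linearly with downtime are hypothetical, so substitute your own figures.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; ignores leap years


def allowed_downtime_minutes(availability: float) -> float:
    """Minutes of downtime per year permitted by an availability target (0..1)."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def outage_cost(downtime_minutes: float, revenue_per_hour: float) -> float:
    """Lost revenue, assuming (hypothetically) it is lost linearly while down."""
    return downtime_minutes / 60.0 * revenue_per_hour


if __name__ == "__main__":
    outage = 8 * 60 + 33  # the 8 h 33 min disruption, in minutes
    for target in (0.99, 0.999, 0.9999, 0.99999):
        print(f"{target:.3%} allows {allowed_downtime_minutes(target):8.1f} min/yr")
    # Hypothetical business earning $10,000 per hour:
    print(f"cost of this outage: ${outage_cost(outage, 10_000):,.0f}")
```

For scale, a 99.9% target allows about 525.6 minutes of downtime per year, so a single 513-minute disruption like this one would consume almost the entire annual budget.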

I will go into the five choices above, and their pros and cons, in more depth in my next blog post. Stay tuned if you’re an AWS user looking to bolster your infrastructure and are interested in hybrid cloud and multi-cloud setups.

Follow us on Twitter and LinkedIn to receive all the latest updates from the cloud.ca blog!

If you're interested in a highly available Canadian cloud infrastructure, sign up for a free 7-day trial of cloud.ca today!

Notes

[1] Gartner’s report, published on the 18th of May, 2015, states that AWS “is the overwhelming market share leader, with over 10 times more cloud IaaS compute capacity in use than the aggregate total of the other 14 providers in [their study]”. Note that this comparison covers only the top 15 cloud IaaS providers, not the entire market; there may in fact be a very long tail of regional cloud IaaS providers, such as cloud.ca.
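The step from Gartner’s wording to the “over 90%” figure in the main text is a one-line calculation. Writing k for the ratio of AWS’s compute capacity in use to the combined total of the other 14 providers, AWS’s share of the top-15 total is:

```latex
s(k) = \frac{k}{k + 1}, \qquad s(10) = \frac{10}{11} \approx 90.9\%
```

Because s(k) increases with k, a ratio of “over 10 times” implies a share above 90.9% of the top 15 providers, not of the whole market.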

[2] AWS now rotates the region in which it launches new features, so that no single region is always at an advantage (or disadvantage, depending on perspective). It has also made great efforts to increase its capacity in US-EAST-1. TechTarget recently wrote an article comparing the various AWS regions in terms of availability and user perception.

[3] AWS experienced four outages in 2012 alone, though its track record has been much better since then. When there is an outage now, people often comment that “it feels like the old days”.

[4] Amazon builds its infrastructure with single points of failure in each of its Availability Zones. While Amazon recommends maintaining your application’s availability by failing over to multiple Availability Zones or regions, cloud.ca is designed to keep an individual instance as available as possible within a single zone.